Build Now While Others Fall Behind: How to Use The Latest AI Models

Level 2 - Value Investor

Welcome Avatar! We like to bring on tech-specific people from time to time. They give a different perspective on how tools are being used and what they are seeing. This is entirely a guest post by BowTiedFox. Everything written here is done by Fox with no editing other than formatting and spell check.

On that note, handing it over!

Introduction

Are you surviving the robot takeover?

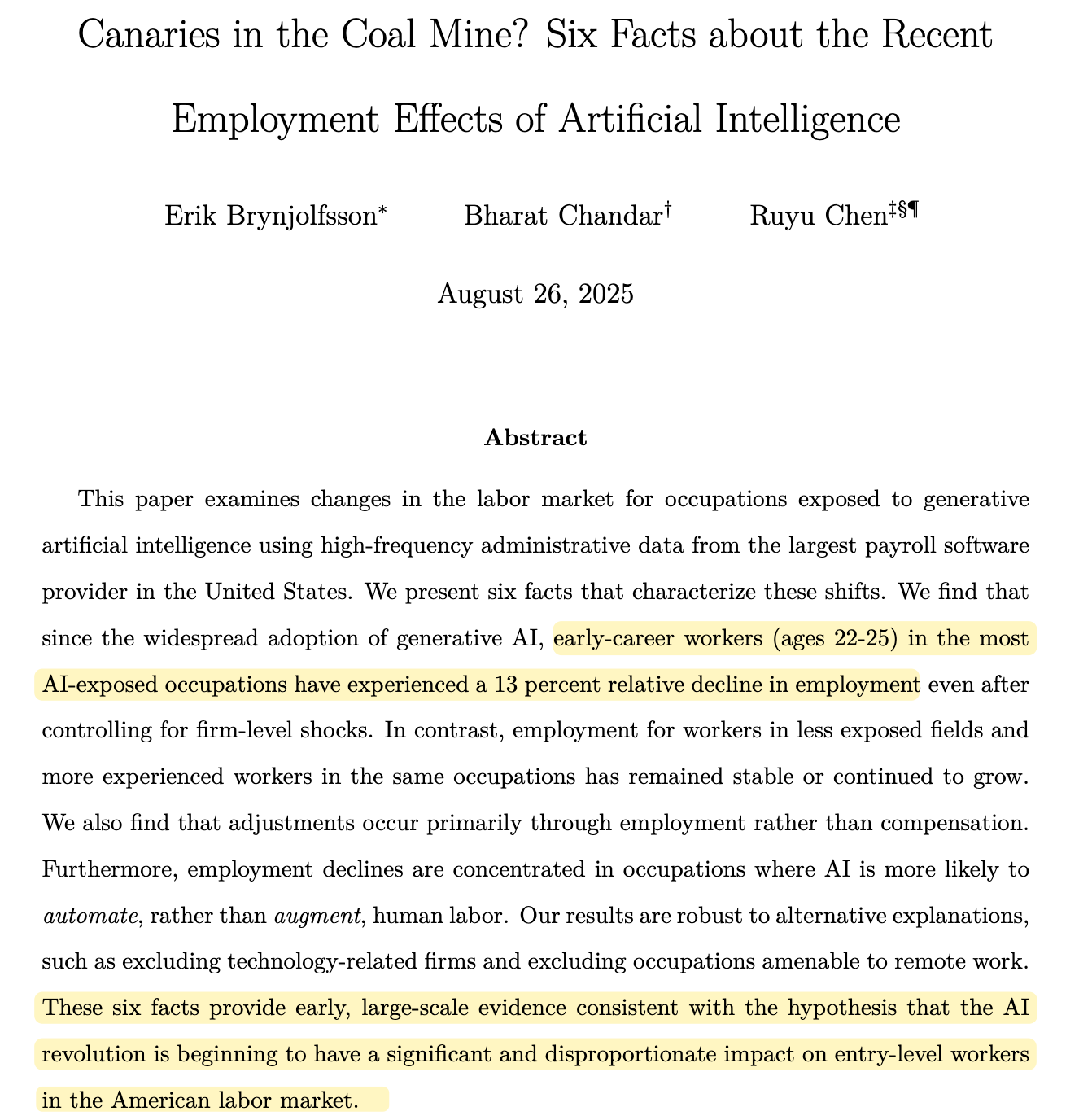

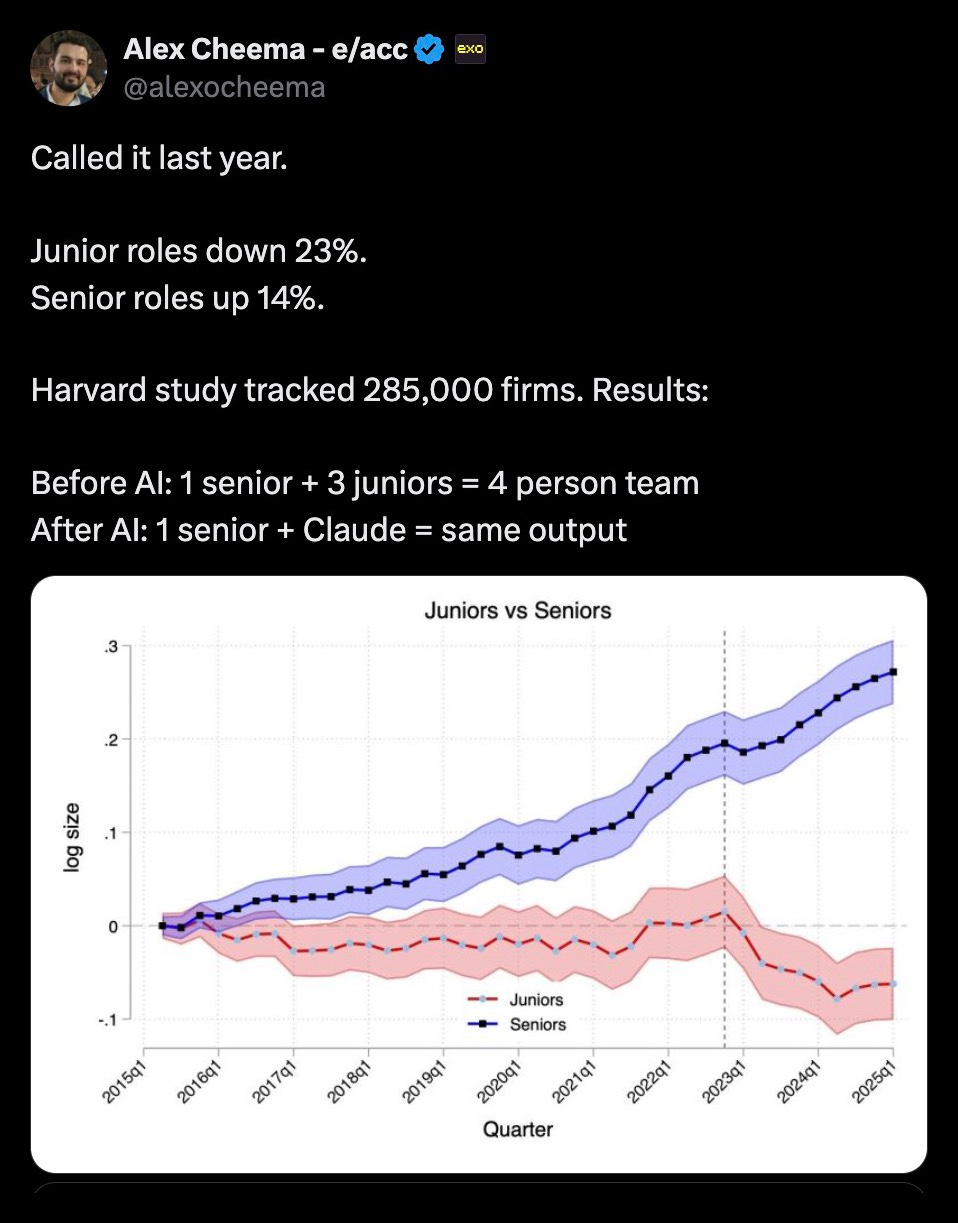

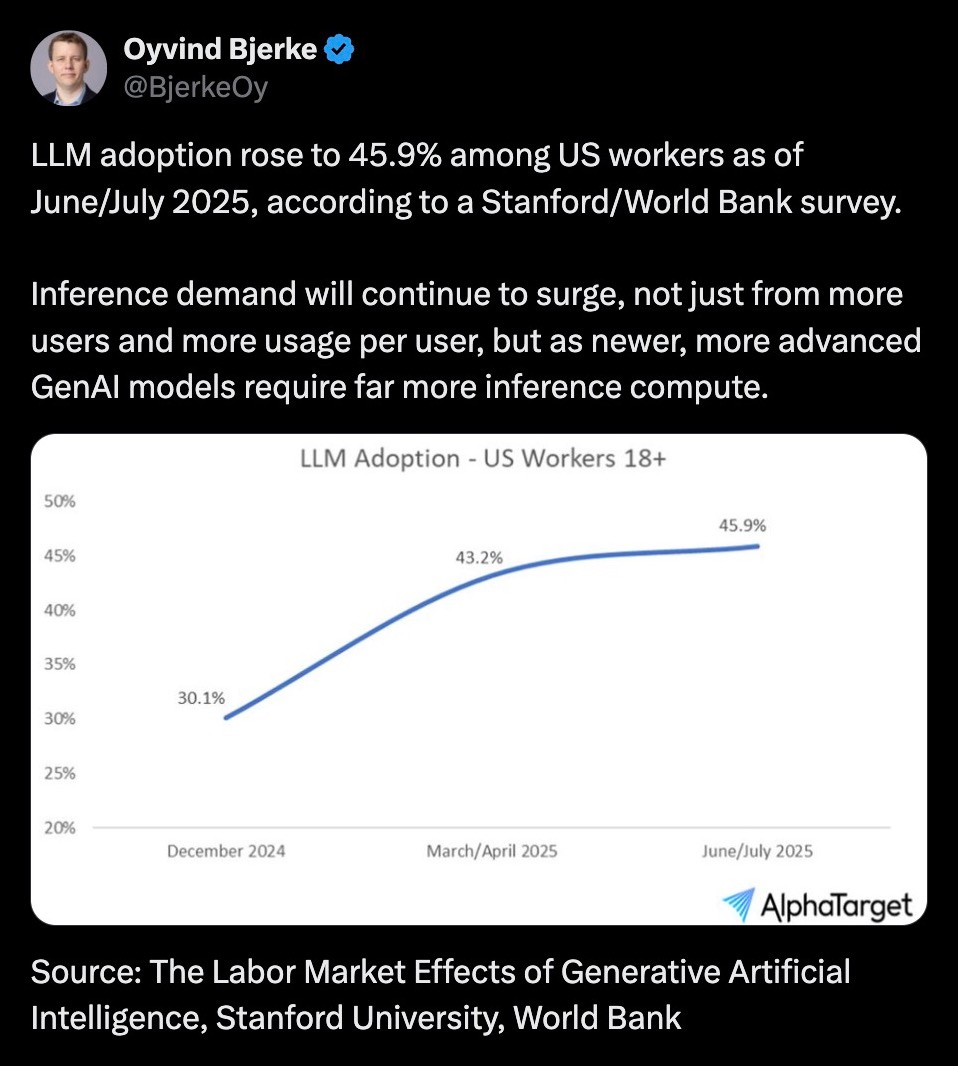

Yes, mass automation isn’t happening right away. But AI is letting senior-level employees do more work with fewer juniors. Stanford researchers estimate that employment for early-career workers in the most AI-exposed jobs has dropped about 13% relative to less-exposed roles, and it’s only getting worse

Employment prospects for young people have never been more grim. However, there hasn’t been a better time to start your own business since the web boom

This is especially true if you keep up-to-date with the latest AI tricks before everyone else finds out. Use firearms while others swing swords. Money loves speed

The advantage of building with AI is that your product improves automatically as the AI models improve. Plus, corporations are still lagging behind in tech adoption, so there’s still opportunity for entrepreneurs to get ahead

There have been a number of new AI updates relevant to business, but the most important release is GPT-5. I cover the other updates on my website bowtiedfox.com

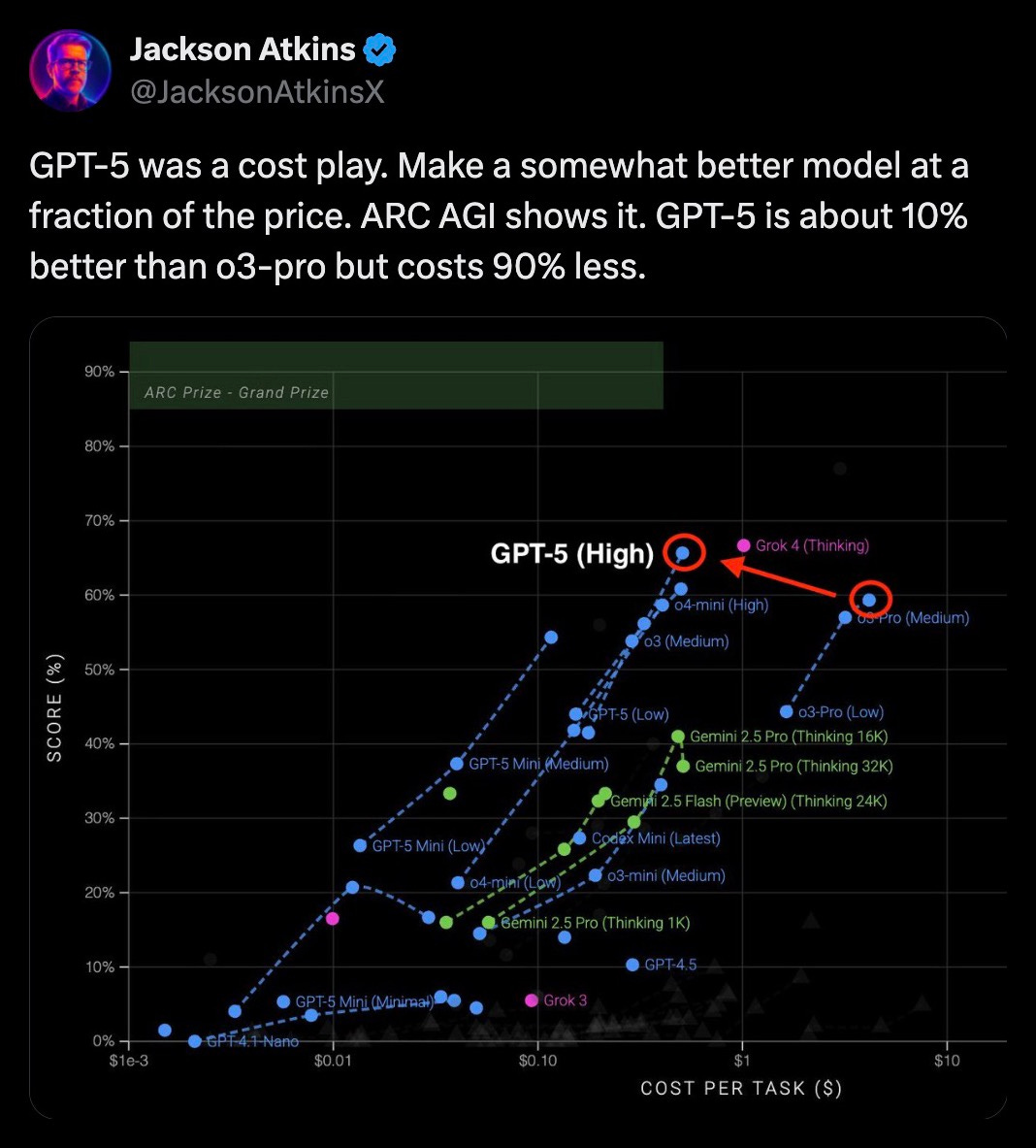

The Only Update That Matters: GPT-5

One new model, two modes: Instant and Thinking

Cleaner Stack: In ChatGPT, GPT-5 replaced all of OpenAI’s other models to make things simpler for the average consumer. Previously, you’d have to choose between 4o, 4.5, 4.1, o3, and o4-mini

Better Memory: Larger Context Windows

Fewer Hallucinations

Less Flattery

Higher accuracy in coding, spatial tasks, creative work

Lower cost per token

Let’s go through each of these improvements and why they matter:

Increased Memory (Context Windows)

Why Memory Matters

A major problem with AI models is that they forget. Long chats lose details and instructions
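One simplified way to picture why long chats “forget”: a model only sees a fixed token budget, so once a conversation outgrows it, the app has to drop (or summarize) older messages. The sketch below assumes a crude 4-characters-per-token estimate, and fit_to_window is a hypothetical helper; real apps use a proper tokenizer and often summarize instead of simply dropping.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token for English text.
    return max(1, len(text) // 4)


def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages that fit in `budget` tokens.

    Older messages are dropped first, which is why early details and
    instructions are the first things a long chat loses.
    """
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    "Always answer in French.",  # early instruction (~6 tokens)
    "x" * 400,                   # long message (~100 tokens)
    "What's the capital of Italy?",
]
print(fit_to_window(chat, budget=50))
```

With a 50-token budget only the last question survives, and the “answer in French” instruction is gone. A bigger context window pushes that cutoff further back, but it doesn’t remove it.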

GPT-5 holds more context. However, memory in the chat app works differently from memory when you use the model in code (through the API)

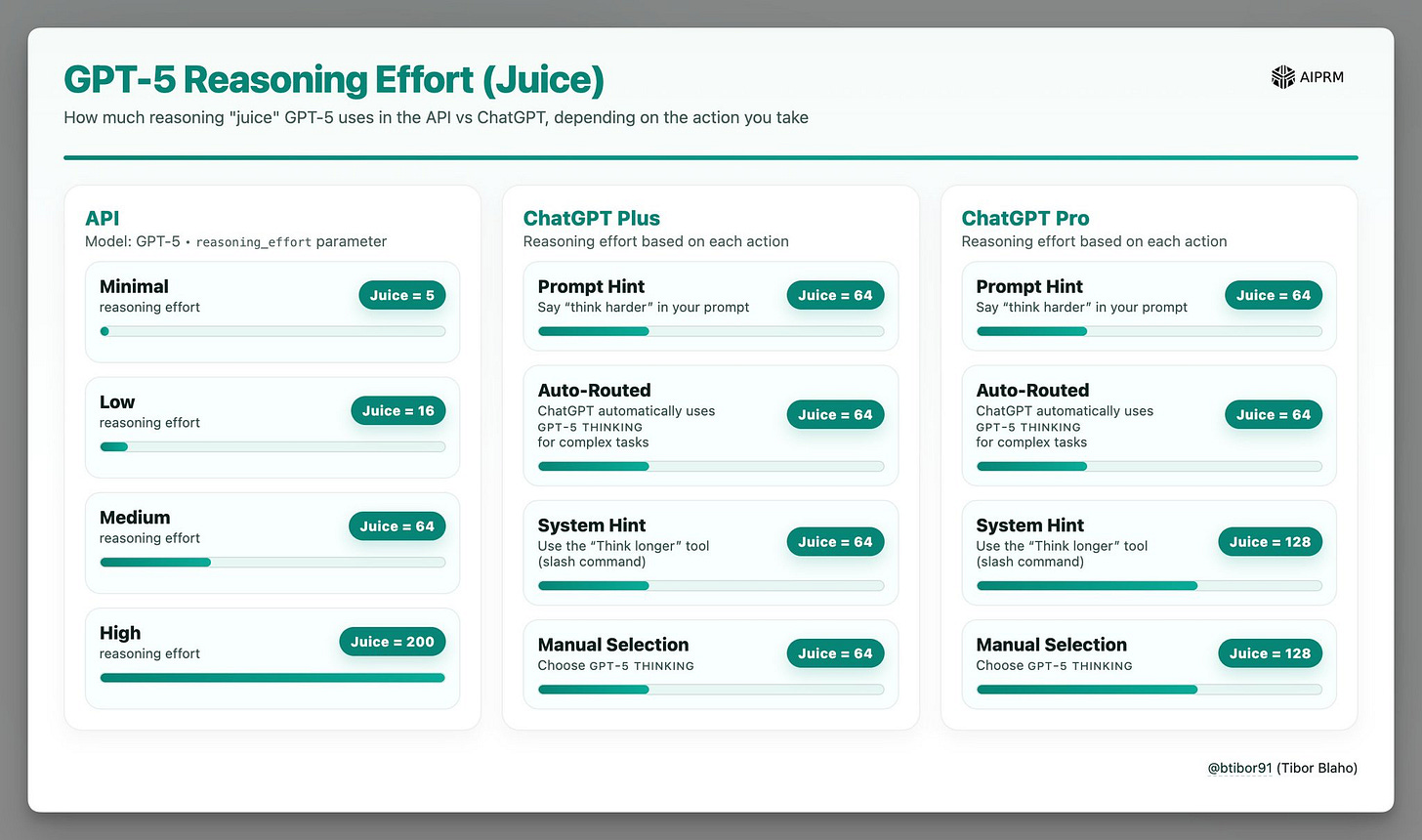

In code, you can set parameters that control how much you’re willing to spend. Spending more buys more reasoning (“juice”) and longer output (“yap”). But in the ChatGPT app, OpenAI sets these parameters based on the plan you’re on:

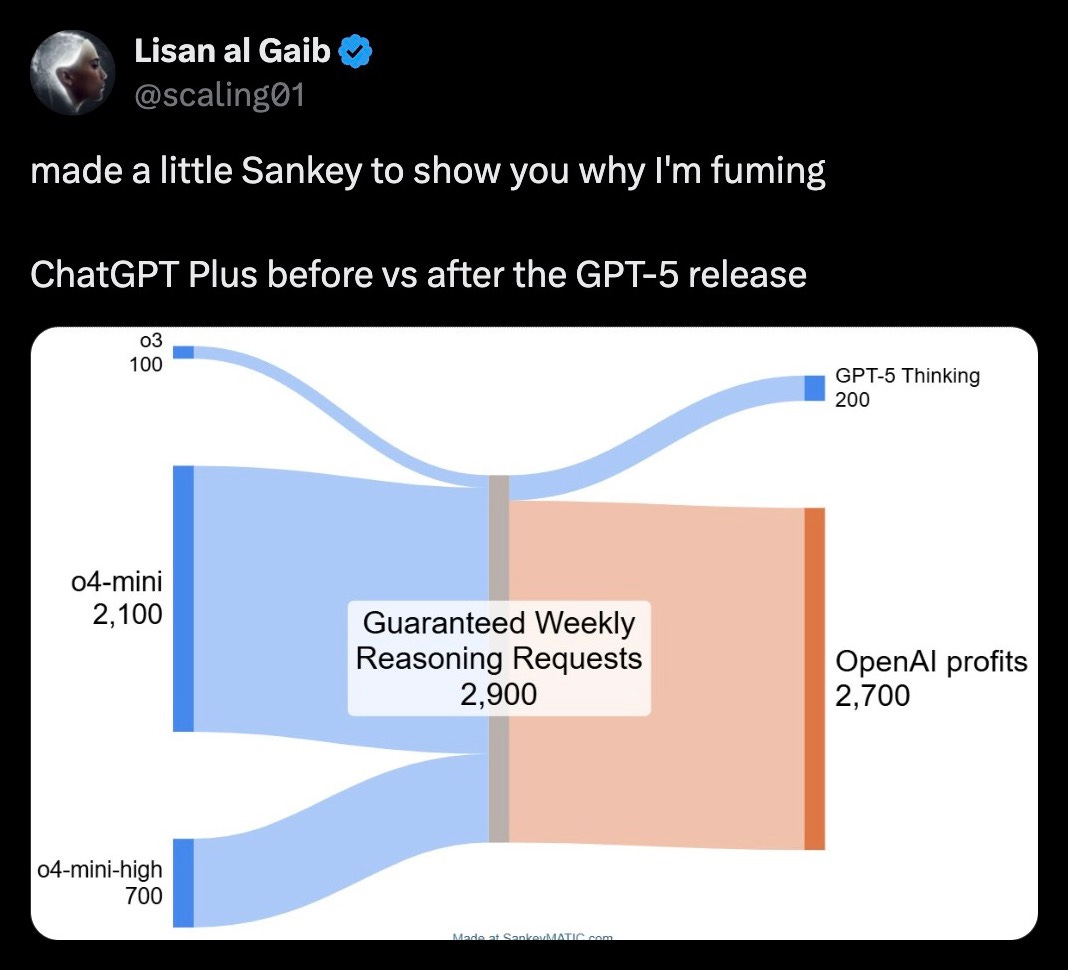

In other words, paid users get better reasoning and memory than free users, even on the same model. And $200/mo Pro users get better reasoning and memory than the $20/mo Plus users
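On the API side, the “juice” and “yap” knobs map loosely to the reasoning-effort and verbosity settings OpenAI exposes for GPT-5. This sketch only builds a request body as a plain dict, so nothing is sent or billed. The field names (reasoning.effort, text.verbosity, max_output_tokens) follow OpenAI’s Responses API as documented at GPT-5’s launch and are worth double-checking against the current docs; build_request is a hypothetical helper.

```python
import json
from typing import Optional

# Allowed values as documented for GPT-5 in OpenAI's Responses API
# (assumption: verify against the current API reference).
EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY = {"low", "medium", "high"}


def build_request(prompt: str, effort: str = "medium",
                  verbosity: str = "medium",
                  max_output_tokens: Optional[int] = None) -> dict:
    """Build a Responses API request body for GPT-5 without sending it."""
    if effort not in EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VERBOSITY:
        raise ValueError(f"unknown verbosity: {verbosity}")
    body = {
        "model": "gpt-5",
        "input": prompt,
        "reasoning": {"effort": effort},   # more effort = more "juice" = more cost
        "text": {"verbosity": verbosity},  # longer answers = more "yap" = more cost
    }
    if max_output_tokens is not None:
        # Hard cap on generated tokens (reasoning tokens count toward it).
        body["max_output_tokens"] = max_output_tokens
    return body


print(json.dumps(build_request("Plan a product launch", effort="high"), indent=2))
```

With the official openai Python package, a dict like this is roughly what you’d unpack into client.responses.create(**body).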

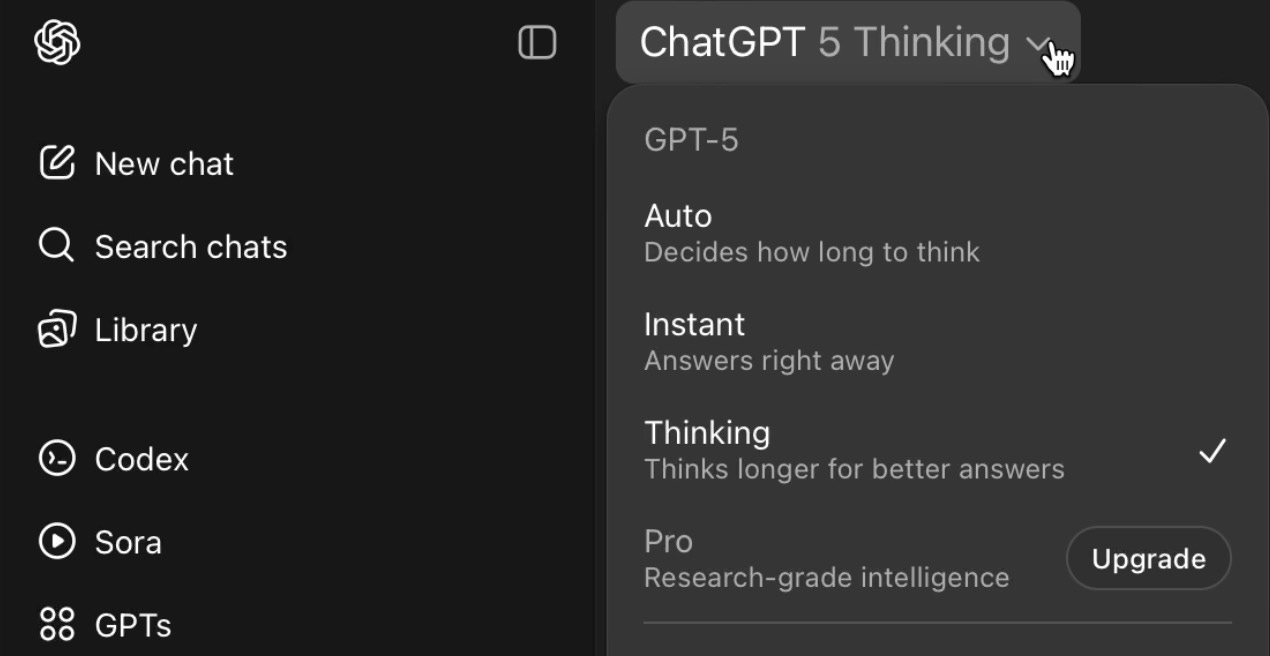

If you want the best option, always use Thinking Mode to get the most intelligence and memory, regardless of which plan you’re on

In Auto mode, OpenAI has an incentive to route you to the cheaper, dumber “Instant” model to save on costs, so set it to Thinking manually. I only use Instant for things that don’t require much “intelligence,” like summarization or looking up quick facts

Memory compounds quality:

It’ll remember details in extended chats

Stronger instruction adherence

Bigger docs and codebases in context

Better planning

More complex, multi-step reasoning

How Memory Affects AI Agents

Multi-step reasoning is especially important for AI agents. People like agents because you can let them run loose. It’s like having a seasoned vice president who can make decisions on your behalf. Compare this to an inexperienced virtual assistant who will lose his mind when he hits a new situation without instructions

Agents become useless quickly because agentic workflows break with ONE mistake. If you let the AI run loose and it makes a single incorrect assumption or action, the entire task fails. This is why it’s important to define the problem clearly

Imagine trying to cook a recipe with one wrong instruction. One mistake will make the entire dish bad, no matter how accurate the other steps are
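The “one mistake ruins the task” point is compounding probability. Assuming, for illustration only, that each step succeeds independently with the same probability, the chance a whole run succeeds is that probability raised to the number of steps:

```python
def chain_success(step_accuracy: float, steps: int) -> float:
    """Probability that every step in an agent run succeeds.

    Assumes each step succeeds independently with the same probability,
    which is a simplification of real agent behaviour.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    return step_accuracy ** steps


for acc in (0.90, 0.95, 0.99):
    print(f"{acc:.0%} per step, 20 steps -> {chain_success(acc, 20):.1%} overall")
```

Even at 95% accuracy per step, a 20-step run finishes cleanly only about 36% of the time. Fewer, better-defined steps is the cheapest way to raise that number.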

If you need help setting up an agent, I can walk you through it at hire.bowtiedfox.com

Fewer Hallucinations

One major consequence of better memory is fewer hallucinations. On top of this, ChatGPT now corrects itself and flags when it doesn’t have the information. OpenAI reports that GPT-5 with thinking is about 80% less likely to make factual errors (“fake news”) than its previous o3 model

If it can’t solve your problem, it will try, run some tests, fail, try a fix, run more tests, fail again, and repeat. After a few attempts, it gives up and explains why it can’t complete the task and what it tried. Huge!
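The try, test, fix, give-up pattern can be sketched as a bounded retry loop. This is not OpenAI’s actual implementation; the candidate fixes and the passes_tests check below are hypothetical stand-ins for a model’s attempts and your test suite.

```python
from typing import Callable, Iterable


def solve_with_retries(attempts: Iterable[str],
                       passes_tests: Callable[[str], bool],
                       max_attempts: int = 3) -> dict:
    """Try candidate solutions until one passes or the attempt budget runs out.

    Returns either the working solution or a report of everything tried,
    like a model explaining why it couldn't complete the task.
    """
    tried: list[str] = []
    for i, candidate in enumerate(attempts):
        if i >= max_attempts:
            break
        tried.append(candidate)
        if passes_tests(candidate):
            return {"status": "solved", "solution": candidate, "tried": tried}
    return {"status": "gave_up", "solution": None, "tried": tried}


# Hypothetical example: the "tests" only accept a fix that adds a null check.
candidates = ["retry the request", "increase the timeout",
              "add a null check", "rewrite the module"]
print(solve_with_retries(candidates, lambda fix: "null check" in fix))
print(solve_with_retries(candidates, lambda fix: False))
```

The attempt cap is the important design choice: without it, a model stuck on bad reasoning loops forever instead of reporting back.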

Self-identifying its mistakes is one of the reasons GPT-5 is excellent with coding problems. Other models get stuck in endless loops of bad reasoning and continue to give you useless output. GPT-5 can identify when it has made a mistake, then backtrack

Yes, there are always risks to getting bad answers with AI. This is especially true with GPT-5 because it follows instructions more strictly. You can get away with poorly defined problems a little better on other models because they’ll run with the ambiguity

But even if ChatGPT makes a mistake, verifying an answer is usually easier than solving the problem. Remember, it’s much faster to check whether a sudoku puzzle is correct than to solve one from scratch
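To make the sudoku point concrete: checking a finished 9×9 grid is just a set comparison on each of its 27 rows, columns, and boxes, while solving one from scratch can require search. A minimal checker, assuming the grid is a list of nine lists of digits:

```python
def is_valid_solution(grid: list[list[int]]) -> bool:
    """Return True if a completed 9x9 sudoku grid is correct."""
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    target = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [{grid[r][c] for r in range(9)} for c in range(9)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in (0, 3, 6)
        for bc in (0, 3, 6)
    ]
    # Each of the 27 units must contain exactly the digits 1-9.
    return all(unit == target for unit in rows + cols + boxes)


# A known-valid grid built from a standard shifting pattern.
solved = [[(r * 3 + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
print(is_valid_solution(solved))  # True

broken = [row[:] for row in solved]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]  # swap two cells
print(is_valid_solution(broken))  # False
```

The same asymmetry is why checking ChatGPT’s output is still a net time saver even when you don’t fully trust it.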

In any case, my suggestion remains the same: use it to supercharge your learning. ChatGPT is best as a personalized tutor. Use it to explain concepts, ask questions, and explore unknown unknowns. If you don’t trust it, use it to find concepts you can Google, or give it source material to summarize and explain, then ask it questions

Sly Fox Tip: I don’t think ChatGPT hallucinations are something worth worrying about, especially if you provide it the right context and detailed instructions. However, many people continue to believe ChatGPT is a random word generator. They still defer to domain experts. You can capitalize on this in two ways: 1) use your reputation as a domain expert to sell a productized service with defined recurring deliverables, but use AI to automate the work and increase your margins, or 2) develop a narrow AI wrapper with a credible interface, like a CalAI “calorie counter” app for Ozempic users

AI Personality and Sycophancy

GPT-5 stopped indiscriminately agreeing with users. It’s one of the few models that will push back and correct your work

I personally think this is excellent. But there was enormous social media backlash from people who became attached to the personality of the previous ChatGPT-4o model. Turns out people are not just susceptible to sycophancy, but demand it

I completely forgot that I’m not the only one using ChatGPT. There are software engineers like me using it in coding tools, and some engineers baking it into their AI apps, but the non-technical crowd is using it for therapy, and some are sliding into “AI psychosis”

Redditors, lol. So because of this insanity, OpenAI brought back the legacy 4o model

The implication of this is that you can build a moat around AI model personality. Users will become more attached to a model based on 1) its training data 2) its system instructions and 3) memories through the user’s conversations. AI companions like Replika still support models from 5 years ago because many users prefer them. Even though the platform has millions of users, enough people remain attached to the older versions to justify ongoing support

The more data the user gives, the more attached they’ll become. It’s the same as being attached to relationships in real life. We don’t swap our partner, friends, kids, pets, or OF girls because there is a “better” or “smarter” one

This “attachment” to models is here to stay because there’s currently no easy way to move your chat history and memories from one AI provider to another. And model providers have no incentive to offer one, either. So the tools people use are likely to be the tools they stick with, even if they run on older tech. Turning your product into a habit is a moat

Note: There are also slight differences between model datasets that affect personality. For example, OpenAI and Google both have data deals with Reddit, xAI has 𝕏/Twitter, Google has YouTube, and Meta has Facebook and Instagram. AI models will mirror the culture of the social media they grew up on

Cost Stack for Coders

GPT-5 for Quality: Maximum reasoning, agents, and quality over cost.

GPT-5 Mini for Value: Everyday development and chat-with-documents (RAG), cheaper/faster, higher rate limits, older knowledge cutoff (fine if you rely on web or tools)

GPT-5 Nano for Speed/Scale: Bulk summaries, labeling, quick replies at scale, ultra cheap vision
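One way to wire that cost stack into an app is a small router that picks a model tier per task. The model IDs gpt-5, gpt-5-mini, and gpt-5-nano are OpenAI’s API names; the task categories and the mapping itself are illustrative assumptions, not official guidance.

```python
# Illustrative mapping from task type to GPT-5 tier (assumption: tune for your app).
ROUTES = {
    "agent": "gpt-5",           # quality over cost: agents, max reasoning
    "code_review": "gpt-5",
    "rag_chat": "gpt-5-mini",   # value: everyday dev, chat-with-documents
    "drafting": "gpt-5-mini",
    "summarize": "gpt-5-nano",  # speed/scale: bulk, cheap, fast
    "label": "gpt-5-nano",
}


def pick_model(task: str, default: str = "gpt-5-mini") -> str:
    """Return the model tier for a task, falling back to the value tier."""
    return ROUTES.get(task, default)


for task in ("agent", "rag_chat", "label", "translate"):
    print(task, "->", pick_model(task))
```

Defaulting unknown tasks to the middle tier is a judgment call: it avoids paying top-tier prices by accident without dropping to the cheapest model for work you haven’t evaluated yet.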

These recommendations are only relevant if you’re using an LLM in production. If you want to use GPT-5 to vibe code, use Codex CLI and set model_reasoning_effort = "high" in ~/.codex/config.toml. You’ll be shocked at the performance, and it comes included with your $20/mo ChatGPT Plus plan with high usage limits

My Predictions for AI

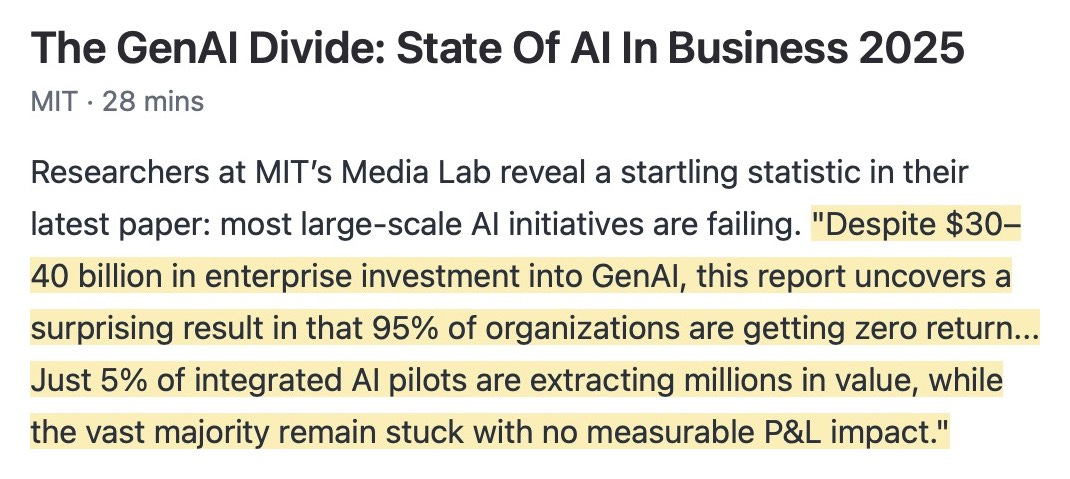

Adoption Reality

Why hasn’t AI taken over the world already? Because a blank canvas is still hard to work with if you don’t know what to do with it. Amara’s Law holds: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run”

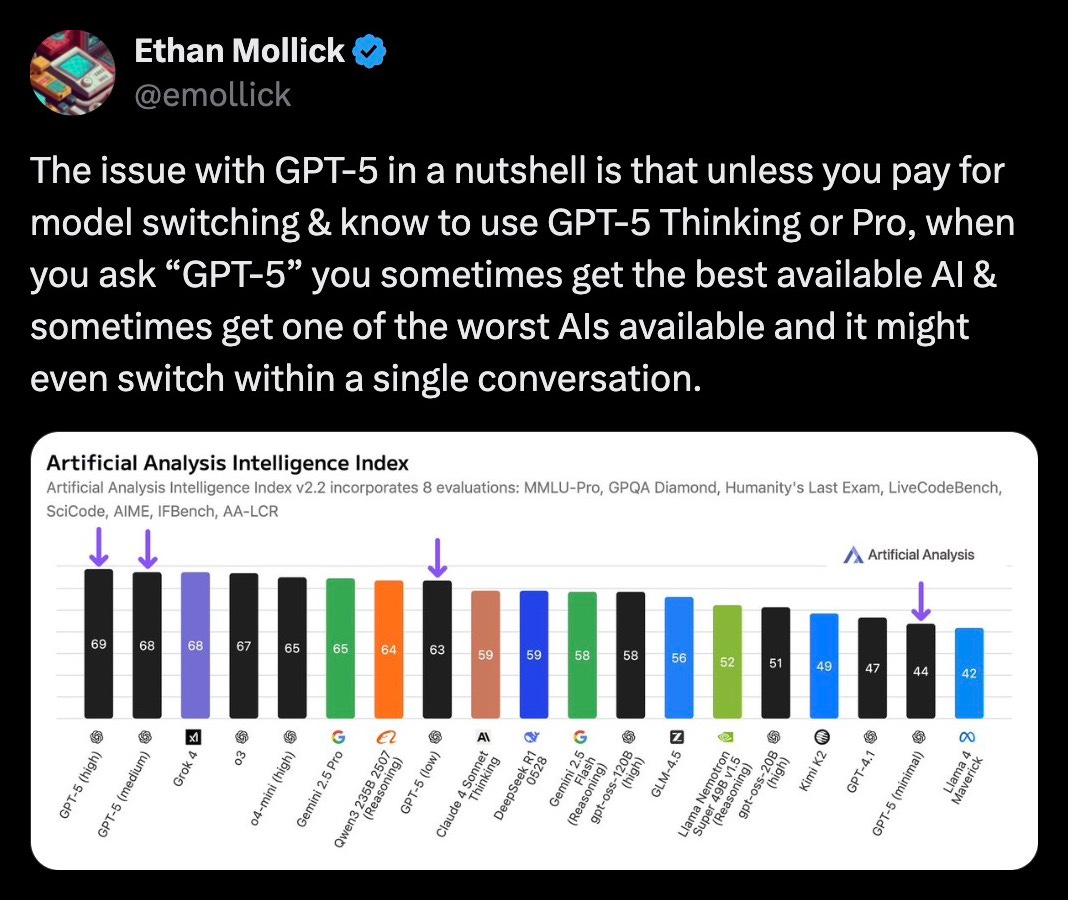

Here’s an easy example. Most users don’t know the difference between AI models, which is why DeepSeek (Chinese GPT) exploded in popularity in January. OpenAI defaulted users to 4o, a non-reasoning model like today’s Instant mode, whereas DeepSeek defaulted to a Thinking model

The same thing is happening now: hundreds of millions just jumped from last year’s 4o model to a state-of-the-art (SOTA) Thinking model

Yet a large share of the population is still NOT using these tools at all. And many existing users aren’t using them correctly

Which means… even if all AI progress stalls tomorrow, there are still years where we can apply current AI capabilities to current problems

There is much to be done because companies are slow to adapt. Existing products are adding AI on top of their existing features instead of making their features AI-native from the start

Take email for example. What is the difference between AI added on top of email versus email built with AI in mind? What I imagine is the following:

AI added: Gmail. Think of “write with AI.” It makes drafting easier, but you still do the writing. All the AI features from smart reply, thread summary, and tone edits are an optional assistive layer that do not change the flow. Human drives, AI suggests

AI native: Email client that auto-triages by intent, drafts replies, schedules meetings, follows-up, closes loops. Human review only when uncertain. AI drives, human supervises

An AI-native product acts automatically when confidence is high, runs as a closed loop where outcomes can be observed and retried, can touch anything from your calendar, CRM, and docs to your task list, learns from user behaviour, and gives users a clear incentive to switch from their current tools to save time
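The “AI drives, human supervises” loop boils down to a confidence threshold: act automatically when the model is confident, otherwise queue the item for review. In this sketch, classify is a hypothetical keyword-based stand-in for a real model call, and the 0.8 threshold is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass
class Triage:
    intent: str
    confidence: float


def classify(email: str) -> Triage:
    """Hypothetical stand-in for an LLM intent classifier."""
    text = email.lower()
    if "meeting" in text or "call" in text:
        return Triage("schedule", 0.92)
    if "invoice" in text:
        return Triage("billing", 0.85)
    return Triage("unknown", 0.40)


def route(email: str, threshold: float = 0.8) -> str:
    """Auto-handle confident cases; send everything else to a human."""
    result = classify(email)
    if result.confidence >= threshold:
        return f"auto:{result.intent}"  # AI drives
    return "human_review"               # human supervises


for msg in ("Can we book a call Tuesday?", "Invoice #42 attached", "Thoughts?"):
    print(msg, "->", route(msg))
```

The threshold is the product lever: raise it and more mail lands in review; lower it and the client does more on its own.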

This is why there’s still room for disruption using AI native products. Gmail is already too mature. There are too many users, too much code, too many existing features and habits. They can’t afford to mess with it too much because of brand risk. They also can’t create a new product because they risk revenue cannibalization and support load

Increased Expectations Over Time

Yes, there’s space for AI native products, but you need to move fast to establish yourself as the go-to. In other words, everyone knows Usain Bolt is the fastest man in the world, but who’s number two? Nobody cares.

And this is the reason I mentioned the adoption rate. It decides how difficult it will be to get users

Expectations increase as adoption increases. And it’s not just about shiny new AI features. Think of how these new GPT-5 updates will affect users. Here’s an example: everyone will get accustomed to reduced hallucinations, which means people will be less forgiving of mistakes in your AI apps

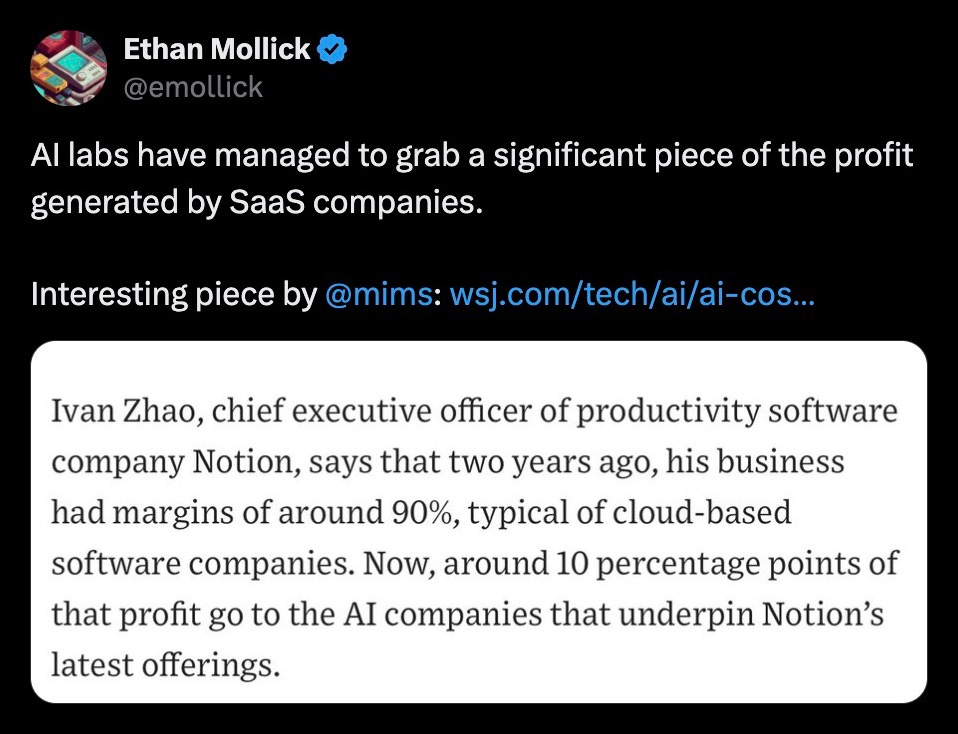

There are also consequences for product development. Increased memory means increased costs (more tokens processed means higher usage fees), more processing time means slower responses, and more data in context means more privacy exposure
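The cost side is simple arithmetic: every request pays for its whole context as input tokens. The prices below are placeholders in dollars per million tokens (in line with GPT-5’s launch list prices, but check OpenAI’s pricing page before relying on them), and request_cost is a hypothetical helper.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float = 1.25,
                 output_price_per_m: float = 10.0) -> float:
    """Dollar cost of one request, given per-million-token prices (assumed)."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Same 1,000-token answer, with a small vs. a large context stuffed in.
small = request_cost(10_000, 1_000)
large = request_cost(200_000, 1_000)
print(f"10k context: ${small:.4f}  200k context: ${large:.4f}  ({large / small:.1f}x)")
```

Multiply that gap by thousands of daily users and “just give it more memory” shows up directly in your margins. Cached input is often billed at a discount, which this sketch ignores.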

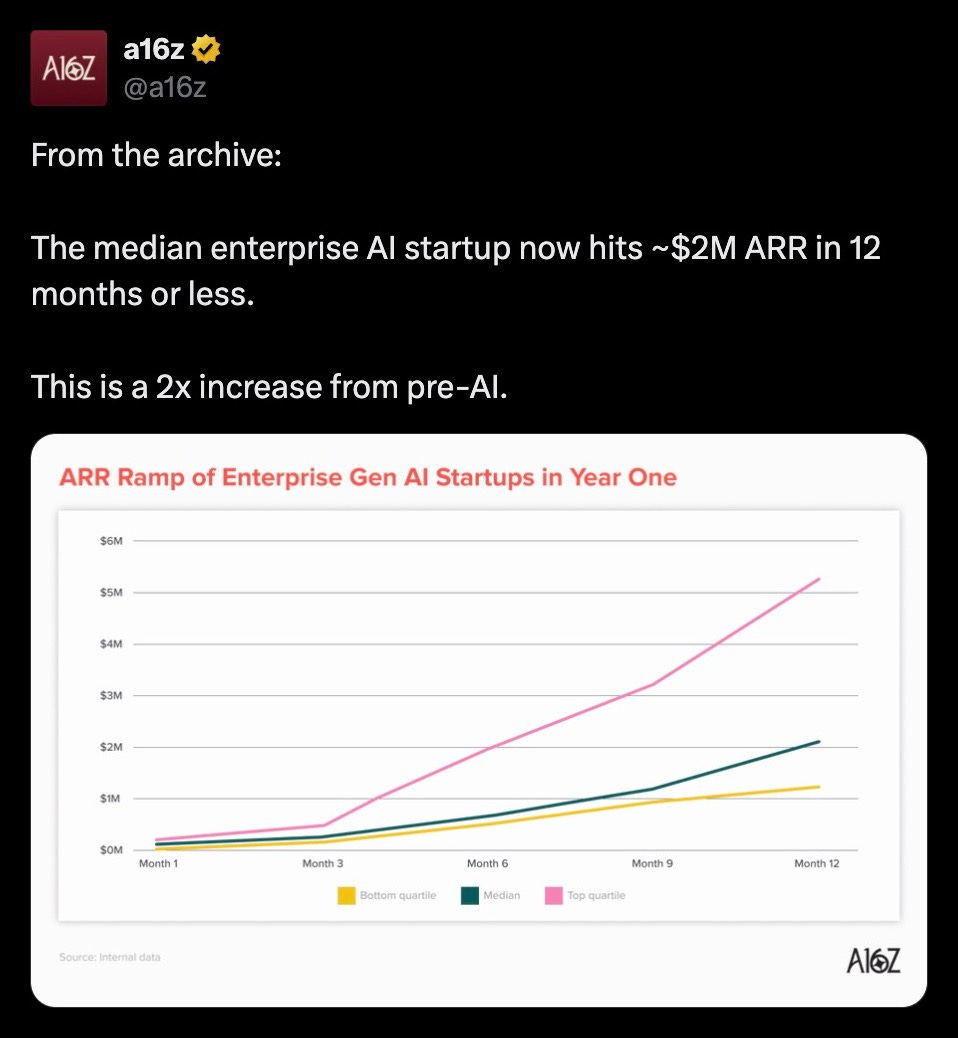

We may see a greater race to the bottom. Some are going to burn their margins to subsidize as much reasoning power as possible, raising user expectations and making it harder for people using usage-based pricing or cheaper models

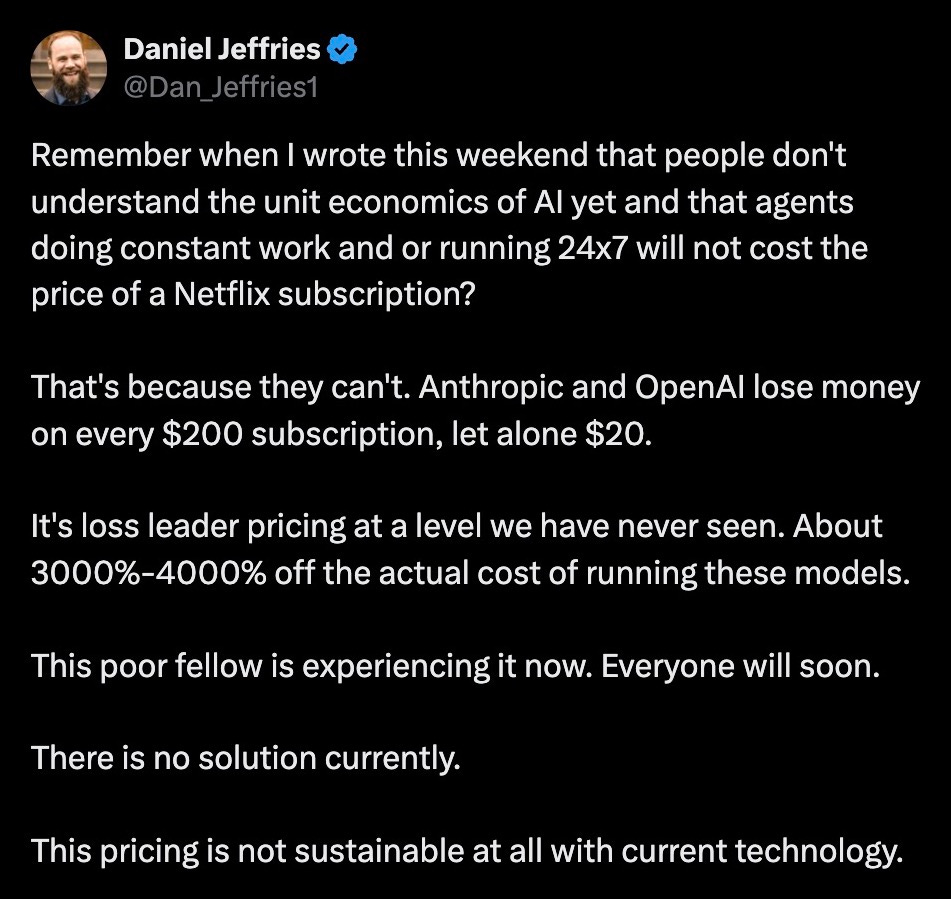

In fact, the major companies are already eating massive losses just to make it available at current prices

But I don’t expect this pricing to last forever. Companies are shifting their focus from AI model research to product development and profits. This means finding ways to cut costs wherever they can

Here’s an example. OpenAI cut Plus plan message limits by 93.1% after releasing GPT-5 (now fixed after a social media outcry)

What does this mean? Intelligence will get cheaper, but people with money will still have the best access

So, as I’ve said before, create a product now while it’s still easier, before user expectations increase. People used to be fine with ugly websites, low-resolution YouTube videos, and rough indie games, but now there are higher expectations for aesthetics. Money loves speed

Quick Implementation Examples

So how do you actually use AI to make more money? Here are three easy wins that I shared on hire.bowtiedfox.com:

Map customer pain points. Explain what you’re selling, like a weight loss pill. Then, ask ChatGPT to “extract pains, desired outcomes, triggers, and objections.” Even better if you can provide some data, like product reviews. Use the output to combine pain + proof + hook for ads. Use this to increase your CTR

UGC script generator. Explain your product’s benefits, the persona, and the platform you’re on. “Weight loss for single moms on TikTok.” Have it output a 30-second storyboard with hooks, a CTA, and lines. Get multiple script variants for A/B testing. Use this to improve your scroll-stop rate or 3-second view rate

Cross-sell suggestions. Have it review your catalog, margins, and co-buy graph (which products get bought together). Use it to create bundles and in-cart add-ons. It can even write copy explaining why the items pair well. Use this to raise your AOV
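Before handing your catalog to ChatGPT, it can help to compute the co-buy data yourself. A minimal sketch, assuming orders are lists of SKUs and you know each SKU’s unit margin (all data below is made up for illustration): count how often each pair is bought together, then rank pairs by that count weighted by their combined margin.

```python
from collections import Counter
from itertools import combinations


def bundle_candidates(orders: list[list[str]], margins: dict[str, float],
                      top_n: int = 3) -> list[tuple[tuple[str, str], float]]:
    """Rank SKU pairs by (times bought together) x (combined unit margin)."""
    pair_counts: Counter = Counter()
    for order in orders:
        # De-duplicate and sort so ("a", "b") and ("b", "a") count as one pair.
        for pair in combinations(sorted(set(order)), 2):
            pair_counts[pair] += 1
    scored = [
        (pair, count * (margins.get(pair[0], 0.0) + margins.get(pair[1], 0.0)))
        for pair, count in pair_counts.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


orders = [
    ["shaker", "protein"],
    ["protein", "shaker", "creatine"],
    ["protein", "creatine"],
    ["shaker", "towel"],
]
margins = {"protein": 12.0, "shaker": 6.0, "creatine": 9.0, "towel": 3.0}
print(bundle_candidates(orders, margins))
```

The top pairs are natural bundle or in-cart add-on candidates; paste them into ChatGPT and ask for the “why these pair well” copy.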

Work Has New Bottlenecks

There’s opportunity to make a lot of money now because the world is changing. People pay to get help with change. And the future of work is looking different not just because of the change in tools, but also the bottlenecks

Work is now less about raw output and more about defining the problem, iterating, and verifying. This reminds me of Amdahl’s Law: the overall speedup of a system is limited by the parts that can’t be sped up. Which means AI may not replace you, but a person using AI will
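Amdahl’s Law has a compact form: if a fraction p of the work is sped up by a factor s, the overall speedup is 1 / ((1 - p) + p / s). A quick sketch; the 50% and 10x figures are illustrative assumptions, not measurements.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is made s times faster."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


# Say drafting is 50% of a task and AI makes drafting 10x faster,
# while reading, defining, and verifying (the other 50%) stay human-speed.
print(f"{amdahl_speedup(0.5, 10):.2f}x")         # 1.82x
print(f"{amdahl_speedup(0.5, 1_000_000):.2f}x")  # 2.00x (the ceiling)
```

No matter how fast the AI half gets, the task can never get more than 2x faster while the human half stays the same. That’s the new-bottleneck argument in numbers.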

Think about it this way. You can generate as much AI text as you want, but you still have to read that text. This might be fine for an email, but what happens when you need to read an entire AI-generated book?

Does this mean writing skills will be less valuable than reading skills? Does this also apply to other kinds of work? How can you capitalize on these changes?

Conclusion: pick a niche, build early, ship AI-native, capture habits before the bar moves

More breakdowns at bowtiedfox.com

Hands-on help and weekly case studies at hire.bowtiedfox.com

-Fox

𝕏 / Twitter @BowTiedFox

Disclaimer: None of this is to be deemed legal or financial advice of any kind. These are *opinions* written by an anonymous group of Ex-Wall Street Tech Bankers and software engineers who moved into affiliate marketing and e-commerce.
